I recently posted this tweet and it kinda blew up. I was not expecting it, since I didn't do anything special.

To give some context: this was for a legacy PHP application running on 5x t2.2xlarge (8 vCPUs and 32GB of RAM each). Those are massive machines, and super oversized for the application's needs.

It was never a priority, so I never worked on it — until now.

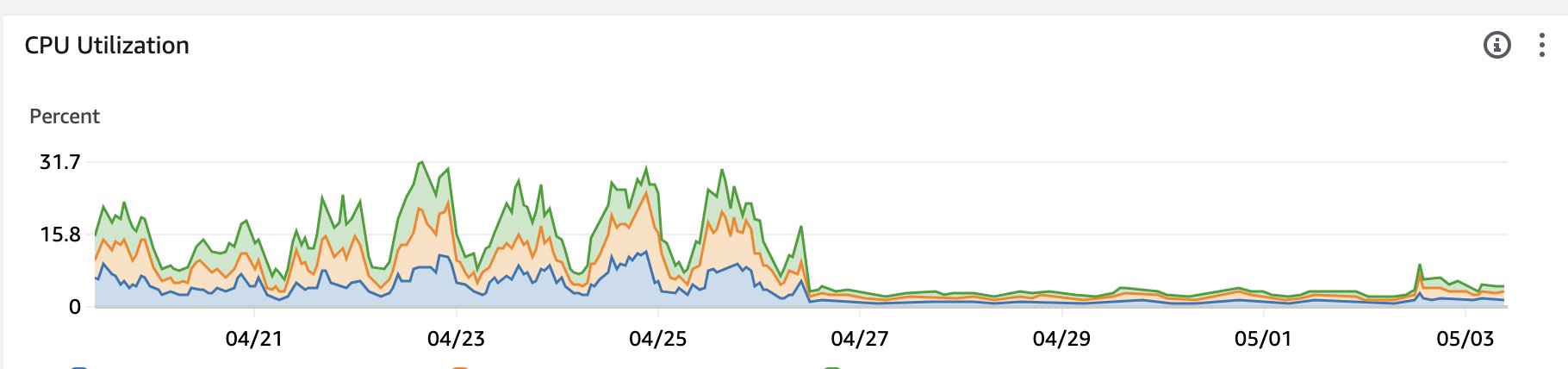

Monitoring showed us that the servers were using ~15% of CPU, up to ~30% when traffic increased, and that memory usage was pretty low. I knew why: php-fpm was never set up properly for these machines, and OPCache was disabled.

More context regarding the tweet

Pre-optimization

- Cluster: 5x t2.2xlarge

- Total vCPUs: 40

- Total memory: 160GB

- Avg. CPU load during daytime: 15-20%, peaking at 30%

- Avg. memory usage: ~2GB

- Avg. PHP execution time: 150ms

- OPCache: disabled

php-fpm config:

    pm.max_children = 100
    pm.start_servers = 6
    pm.min_spare_servers = 4
    pm.max_spare_servers = 8

Post-optimization

- Cluster: 2x t2.2xlarge

- Total vCPUs: 16

- Total memory: 64GB

- Avg. CPU load during daytime: ~2%

- Avg. memory usage: ~7GB

- Avg. PHP execution time: 23ms

- OPCache: enabled

php-fpm config:

    pm.max_children = 300
    pm.start_servers = 100
    pm.min_spare_servers = 60
    pm.max_spare_servers = 150

What is PHP-FPM?

PHP-FPM is the most widely used way to serve PHP applications. It is, essentially, a process manager.

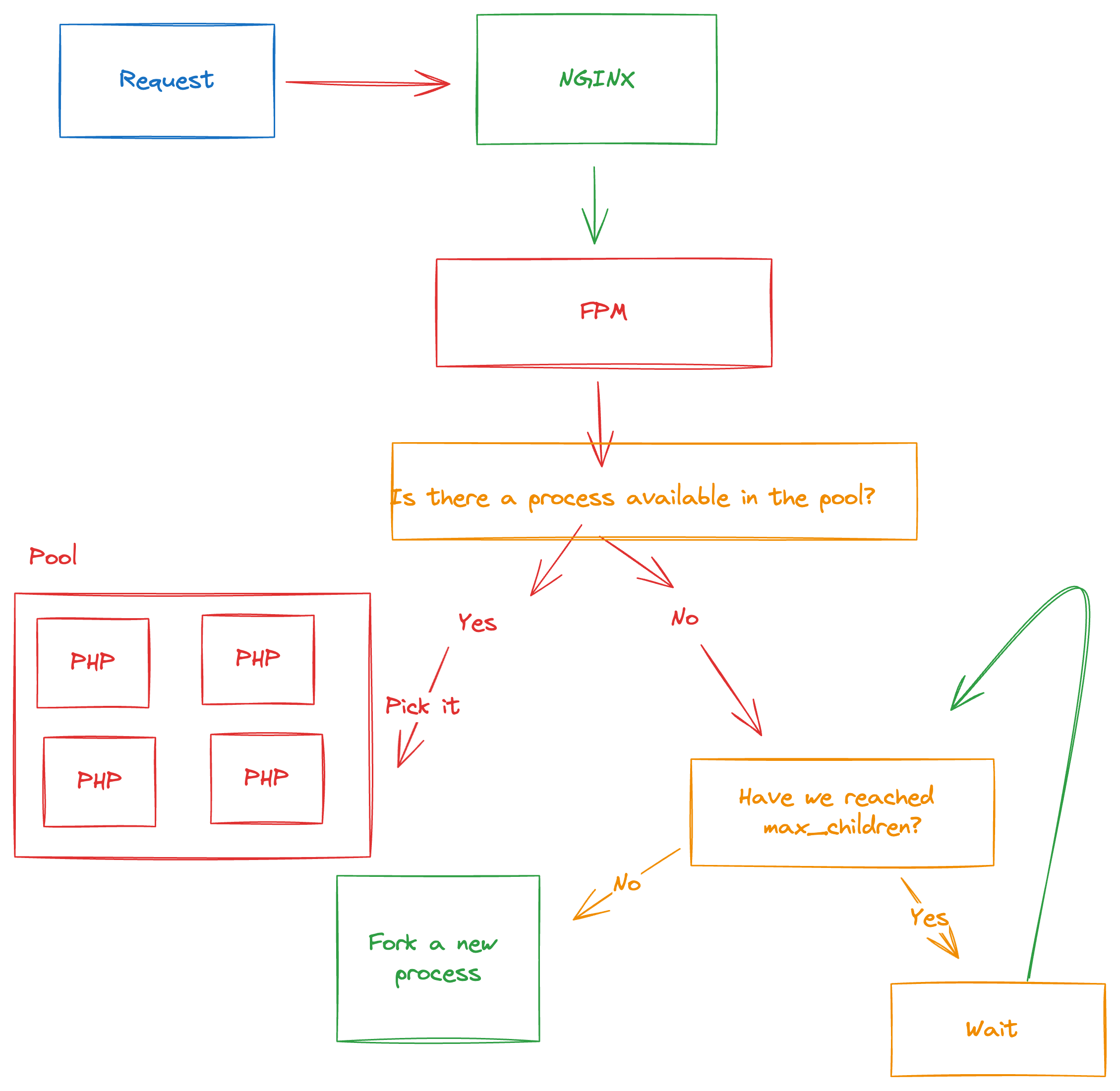

Most requests follow this flow:

Request -> NGINX -> php-fpm -> (pick or spawn a PHP process) -> execute code -> response

NGINX acts as a reverse proxy that talks to fpm through a socket — FPM is responsible for picking a process from a pool, or spawning a new process if there are no idle processes available (and if you're below the defined max_children value).

For example, imagine the following configuration:

- Max processes: 10

- Maximum pool size: 8

If you receive 8 simultaneous requests, php-fpm would simply pick idle processes from the pool. If you were to receive 10 requests, it'd pick the 8 idle processes and fork 2 extra.
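To make that example concrete, here is a toy model in shell. It only mirrors the simplified description above and is not how php-fpm is implemented internally; the function name fpm_dispatch and its output format are made up for illustration.

```shell
# fpm_dispatch REQUESTS IDLE MAX_CHILDREN
# Toy model: reuse idle processes first, fork until max_children is reached,
# and leave whatever is left waiting for a free process.
fpm_dispatch() {
  local requests=$1 idle=$2 max_children=$3
  local reused=$(( requests < idle ? requests : idle ))
  local need=$(( requests - reused ))     # requests that found no idle process
  local room=$(( max_children - idle ))   # processes we are still allowed to fork
  local forked=$(( need < room ? need : room ))
  local queued=$(( need - forked ))
  echo "reused=$reused forked=$forked queued=$queued"
}

fpm_dispatch 8 8 10    # reused=8 forked=0 queued=0
fpm_dispatch 10 8 10   # reused=8 forked=2 queued=0
fpm_dispatch 12 8 10   # reused=8 forked=2 queued=2
```

The third call shows what happens past the limit: the two extra requests have to wait until a process frees up.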

Forking processes is expensive, but it's not the end of the world. We're going to come back to this.

What is OPCache?

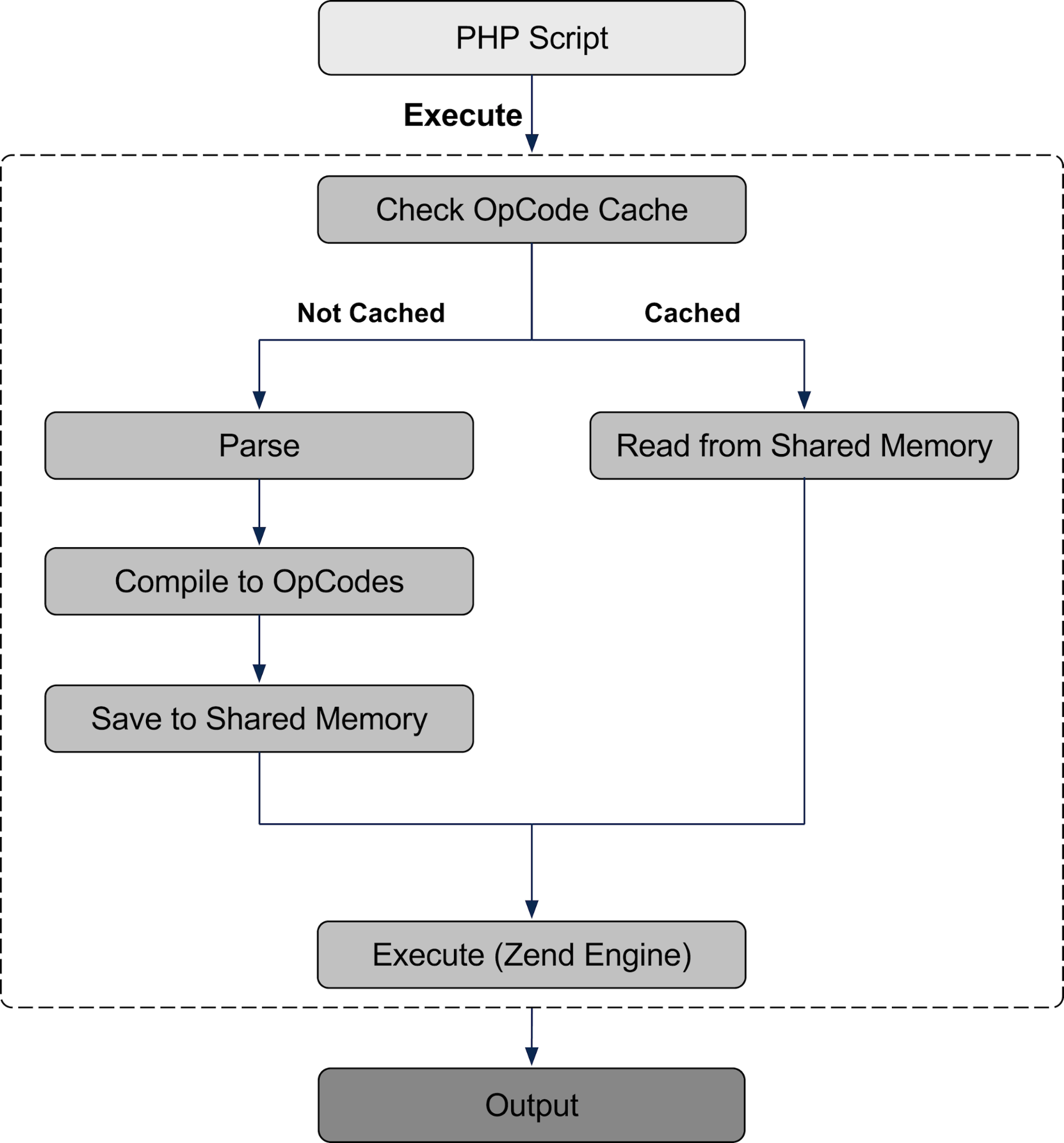

Put simply, OPCache is an opcode cache.

Uhhh... okay, what is an opcode?

An opcode is a low-level instruction. In PHP's case, opcodes are instructions for the Zend Engine (PHP's virtual machine) rather than for the CPU itself. We don't need to go down this rabbit hole. Here's what happens when a PHP script is executed:

- The interpreter loads the script

- The script is parsed into a syntax tree

- That tree is converted into opcodes for the Zend Engine

- The Zend Engine executes these opcodes

- Output

When you have OPCache enabled, steps 2 and 3 are skipped:

- The interpreter loads the script

- The Zend Engine executes the cached opcodes

- Output

Obviously, if there's a cache miss, all the steps will have to be executed. As you can imagine, caching these expensive operations provides a massive performance improvement, requiring fewer CPU cycles and reducing overall memory consumption.

Source: https://www.cloudways.com/blog/integrate-php-opcache/


Optimizing a PHP application

To test things, I set up a few machines on Digital Ocean:

- Test server: 4 vCPUs, 8GB RAM, running a simple Laravel application that reads from and writes to the database

- Load testing server: a simple server to make HTTP calls

- Database: 2 vCPUs, 4GB RAM, MySQL

Here's the code the Laravel application runs:

    <?php

    namespace App\Http\Controllers;

    use App\Models\Visit;

    class FooController
    {
        public function __invoke()
        {
            // One write: insert a new row for this visit
            Visit::query()->create();

            // One read: return the total number of visits
            return response()->json([
                'visits' => Visit::query()->count(),
            ]);
        }
    }

The app server was deployed using Forge.

Results

Let's start with the results.

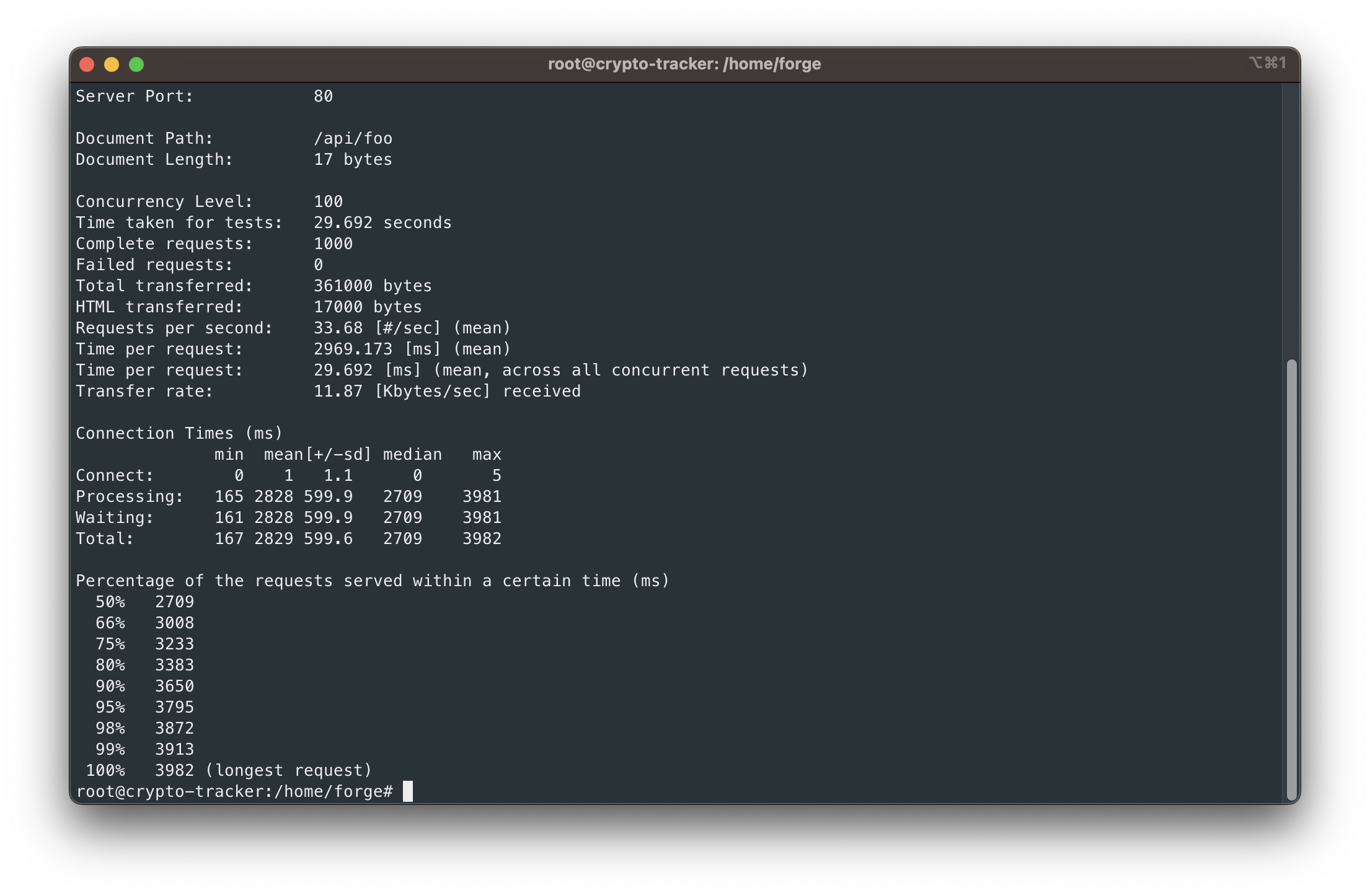

Initial Benchmark

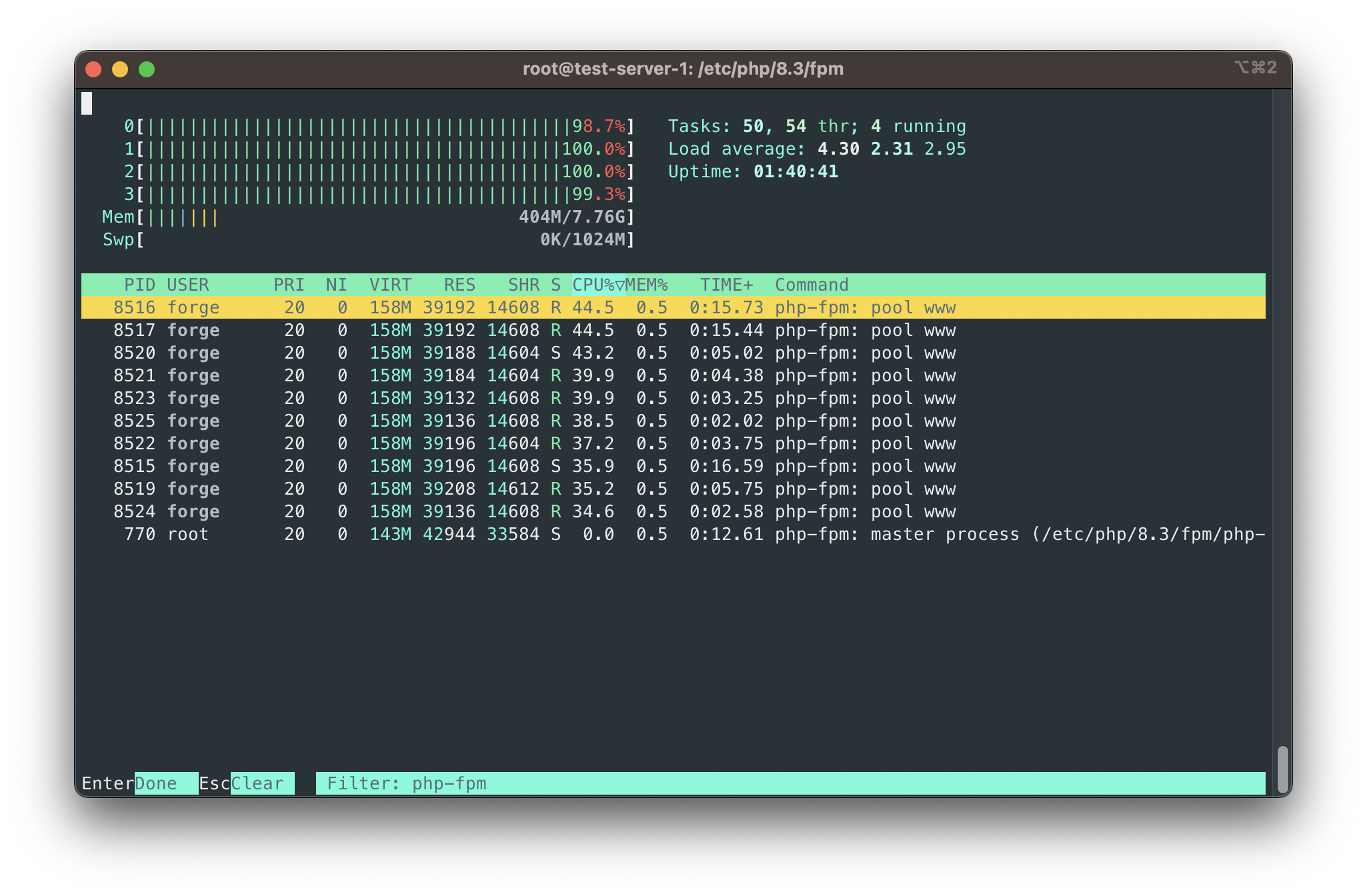

I ran a simple test (ab -n 1000 -c 10) and this is what I got: 33 reqs/s, and full load on the server's CPU. Pretty horrible, right?
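If you want to compare runs without eyeballing the report, a small helper can pull the throughput figure out of ab's output. This is a minimal sketch: rps_from_ab is a made-up name, and the URL in the usage comment is a placeholder, since the post doesn't give the actual address.

```shell
# rps_from_ab: reads ApacheBench output on stdin and prints the mean
# requests-per-second value from the "Requests per second:" line.
rps_from_ab() {
  awk -F': *' '/^Requests per second/ { split($2, parts, " "); print parts[1] }'
}

# Usage (placeholder URL, replace with your own test server):
#   ab -n 1000 -c 10 http://your-test-server/ | rps_from_ab
```

Running it after each change (OPCache on, new FPM settings, and so on) gives you one number per run to compare.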

After enabling OPCache, I got this:

119 reqs/s without nuking the server. Much better.

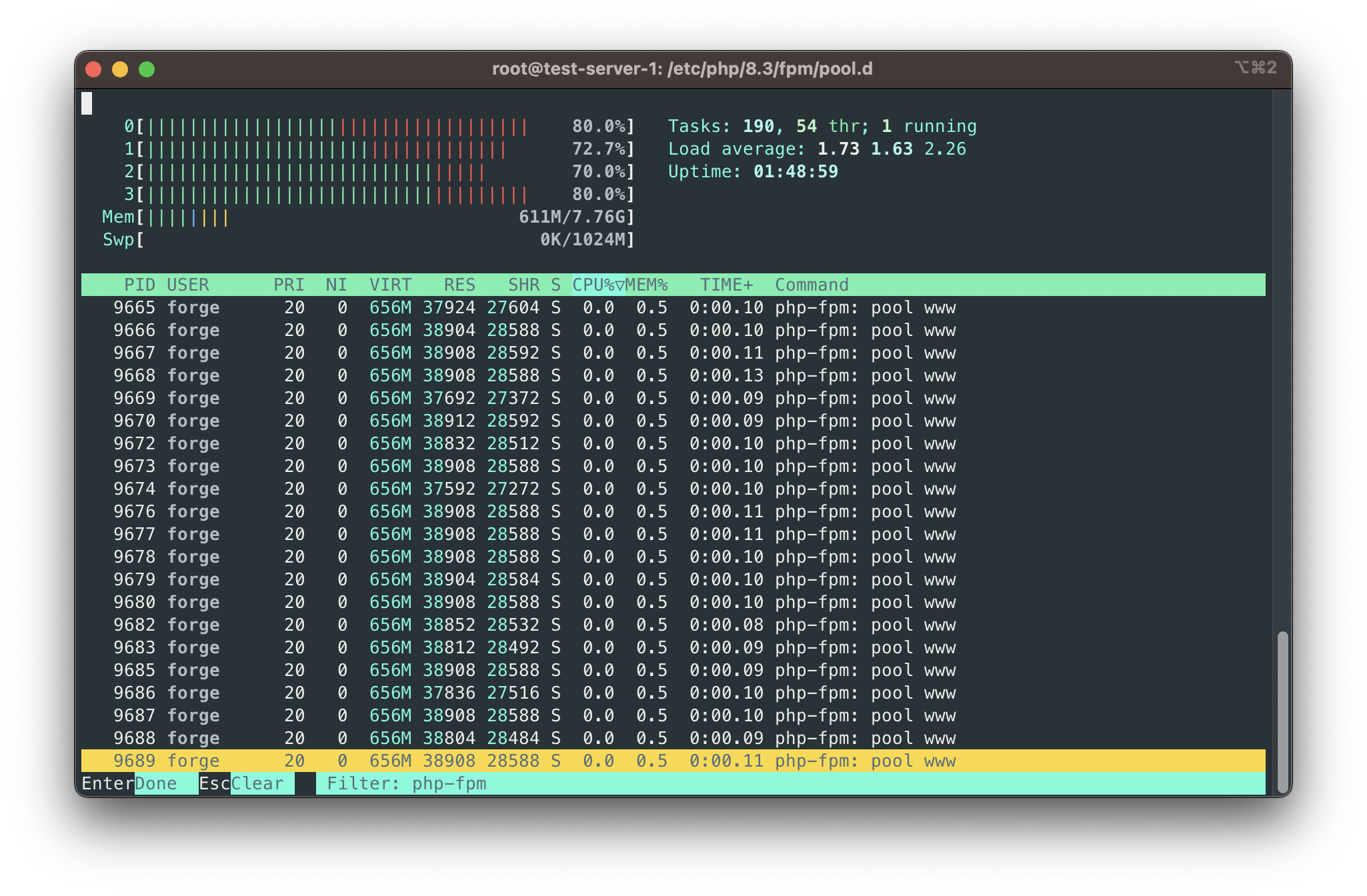

After tweaking php-fpm, I managed to get 310 reqs/s with the following peak load:

Sadly, I wasn't able to tune it enough to keep CPU usage consistently close to 100%.
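To put the three throughput figures above (33, 119 and 310 reqs/s) in perspective, here is the relative gain of each step. The speedup helper is just for illustration.

```shell
# speedup BEFORE AFTER: prints how many times faster AFTER is than BEFORE
speedup() {
  awk -v before="$1" -v after="$2" 'BEGIN { printf "%.1fx\n", after / before }'
}

speedup 33 119    # baseline -> OPCache enabled:      3.6x
speedup 119 310   # OPCache  -> OPCache + FPM tuning: 2.6x
speedup 33 310    # baseline -> both changes:         9.4x
```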


Enabling OPCache

Before tweaking FPM, I suggest enabling OPCache — otherwise, you'll have to tweak it again as CPU load and memory usage are going to change.

OPCache is distributed as an extension, so make sure you have it installed.

First, run php -v to see if you have it already:

    Copyright (c) The PHP Group
    Zend Engine v4.2.8, Copyright (c) Zend Technologies
        with Xdebug v3.2.2, Copyright (c) 2002-2023, by Derick Rethans
        with Zend OPcache v8.2.8, Copyright (c), by Zend Technologies

To see if it is enabled, run php -i | grep opcache and look for opcache.enable:

    /etc/php/8.2/conf.d/10-opcache.ini,
    opcache.blacklist_filename => no value => no value
    opcache.consistency_checks => 0 => 0
    opcache.dups_fix => Off => Off
    opcache.enable => Off => Off

To enable opcache, locate your php.ini file by running php --ini and looking for Loaded Configuration File:

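    cd /etc/php/8.2
    vim php.ini

Look for [opcache] to locate the opcache settings and switch opcache.enable to 1. Keep in mind that php --ini reports the CLI's configuration, and php-fpm may load a different php.ini (on Debian and Ubuntu it usually lives under /etc/php/8.2/fpm/), so make sure you edit the one php-fpm actually uses.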

There are other OPCache configurations that are important — opcache.max_accelerated_files is one of them: it dictates how many files are going to be cached, including your vendor folder.

Make sure to change it to a reasonable value based on your project.
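One rough way to pick that value is to count the PHP files in your project, vendor folder included, and set opcache.max_accelerated_files comfortably above the result. A minimal sketch, where count_php_files is a made-up helper name:

```shell
# count_php_files [DIR]: counts every .php file under DIR (default: current
# directory), including the ones inside vendor/.
count_php_files() {
  find "${1:-.}" -type f -name '*.php' | wc -l | tr -d ' '
}

# Usage: run it from the project root, or pass the path explicitly:
#   count_php_files /path/to/your/project
```

If the count is close to or above your current setting, some files will never be cached, so leave some headroom.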

Symfony has recommendations for OPCache settings.

Once you have turned OPCache on, make sure to reload your php-fpm service to respawn the php processes: service php-fpm reload.

P.S.: if opcache.validate_timestamps is disabled (a common production setting), you must reload php-fpm whenever you deploy new code, otherwise the old cached opcodes keep being served. If you don't want to do this, leave opcache.validate_timestamps enabled (it is on by default) and OPCache will check files for changes, although that has a performance penalty.

OPCache should already give you a massive boost in performance. Try it out and see what happens.

Tuning PHP-FPM

Ideal PHP-FPM scenario

PHP-FPM offers the following configuration:

- Process manager type (static, dynamic, ondemand)

- Max workers (pm.max_children): the maximum number of processes php-fpm is going to fork

- Start processes (pm.start_servers): how many processes are going to be forked when you start php-fpm

- Minimum pool size (pm.min_spare_servers): the lowest number of processes in the pool

- Maximum pool size (pm.max_spare_servers): the highest number of processes in the pool

Ideally, you want to have a configuration in which:

- You utilize most of the server's memory

- You utilize most of the CPU

FPM usually ships like this:

    pm = dynamic
    pm.max_children = 10
    pm.start_servers = 2
    pm.max_spare_servers = 3
    pm.min_spare_servers = 1

As you can guess, that's probably not good. These settings mean that you can only handle up to 10 simultaneous requests (the others will wait until a process is available), and that if you get more than 3 simultaneous requests, it'll need to spawn new processes (up to 7 more).

The intuitive way to set up FPM (and what most guides will tell you) is to calculate how much memory each process consumes, add some buffer, and divide the available memory in the system by that value. In our test, each process consumed ~ 16MB of memory.

You can get a rough estimate by running this:

    ps --no-headers -eo rss,comm | grep php | awk '{sum+=$1; count++} END {if (count > 0) print "Average Memory Usage (KB):", sum/count; else print "No PHP processes found."}'

In our case, we'd get something like this: 3500MB / 16MB ≈ 218, meaning that we could run up to 218 processes. To keep things simple, let's round that to 200.
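The same arithmetic as a small shell helper, in case you want to plug in your own numbers. This is only a sketch: max_children is a made-up function name, and the optional third argument is a hypothetical per-process buffer that is not part of the figures above.

```shell
# max_children AVAILABLE_MB PER_PROCESS_MB [BUFFER_MB]
# Integer-divides the memory you are willing to give PHP by the per-process
# footprint, plus an optional safety buffer added to each process.
max_children() {
  local available_mb=$1 per_process_mb=$2 buffer_mb=${3:-0}
  echo $(( available_mb / (per_process_mb + buffer_mb) ))
}

max_children 3500 16     # prints 218, the figure from this post
max_children 3500 16 4   # prints 175, with a hypothetical 4MB buffer per process
```

Treat the result as an upper bound dictated by memory, not as the value to put in the config.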

Now, although that makes sense, you won't necessarily get the best performance with the highest number of processes: it depends on how I/O-bound or CPU-bound your application is, the particularities of each endpoint, etc.

For example, if CPU is the bottleneck, forking more processes won't do any good: the cores are already saturated, and the extra processes only add context-switching overhead.

There are many bottlenecks you might be hitting where simply adding more processes won't do the trick:

- Reaching max bandwidth for the server

- Hitting the maximum number of database connections

- Maximum number of TCP connections

- Disk I/O

- Webserver configuration

My recommendation is to benchmark with the default settings while monitoring CPU and memory usage.

In my tests for this post, I found the sweet spot with just 100 max processes, even though there was enough memory to handle over 200 processes. Here's the configuration I used:

    pm = dynamic
    pm.max_children = 100
    pm.start_servers = 70
    pm.max_spare_servers = 80
    pm.min_spare_servers = 60

That allows fpm to start with a reasonable amount of processes, and if traffic happens to increase, it can fork new ones to handle it. In real life, you definitely want to rely on monitoring to measure the server load and whether you need more processes. This is just to get started.
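When you experiment with these numbers, keep their ordering consistent. As far as I know, php-fpm in dynamic mode expects pm.min_spare_servers <= pm.start_servers <= pm.max_spare_servers <= pm.max_children and rejects a pool that breaks this. Here is a quick sanity check before reloading; check_pool is a made-up helper, not part of php-fpm.

```shell
# check_pool MAX_CHILDREN START_SERVERS MIN_SPARE MAX_SPARE
# Prints "ok" when min_spare <= start <= max_spare <= max_children,
# otherwise prints "invalid" and returns a non-zero status.
check_pool() {
  local max_children=$1 start=$2 min_spare=$3 max_spare=$4
  if [ "$min_spare" -le "$start" ] && [ "$start" -le "$max_spare" ] \
     && [ "$max_spare" -le "$max_children" ]; then
    echo "ok"
  else
    echo "invalid"
    return 1
  fi
}

check_pool 100 70 60 80   # the configuration used in this post: ok
```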

Again, my suggestion is to play with it to kick things off, and then to keep an eye on monitoring to further adjust it. At some point, increasing the amount of processes might have the opposite effect — remember that forking processes is expensive, and switching between them is also expensive. Applications are also subject to latency, I/O ops, etc. Data is always your friend when it comes to infrastructure, and you're basically blind if you don't have access to that. Readjusting settings as traffic patterns/usage change is also key.

The Gist

So, here's what you want to do:

- Enable OPCache. That's the most important thing.

- Run some load testing and monitor your server. Use services like CloudWatch and New Relic to monitor different resources on the server and spot bottlenecks.

- Adjust php-fpm pool settings to try and achieve the most usage of your server under load; keep in mind this might not necessarily mean trying to squeeze all of the RAM available in the machine.

- Test against different types of workloads in your application: I/O bound, CPU bound, etc.

- Use tools such as JMeter to properly load test your application.

I highly suggest setting up a testing environment that looks exactly like production: same type of server, same database, same data (minus PII) and run tests there.

Play around with the FPM settings until you find the sweet spot. There's no recipe, as every application is different.

Keep in mind processes are expensive, and for some applications, using something like Swoole or FrankenPHP is ideal, as those rely on an async, non-blocking I/O architecture, where you have fewer processes and instead rely on coroutines (which are cheap) to serve requests concurrently.

Laravel specific tips

If you're running Laravel, make sure to run php artisan optimize to cache views, config and route files.

Although this is unrelated to infrastructure, it'll make it a bit faster.

If you need high performance, I suggest looking into Laravel Octane: Octane allows you to use alternative runtimes (such as Swoole and FrankenPHP) and treat Laravel as a long-running process, which means you no longer have to boot the framework on every request, making it significantly faster.